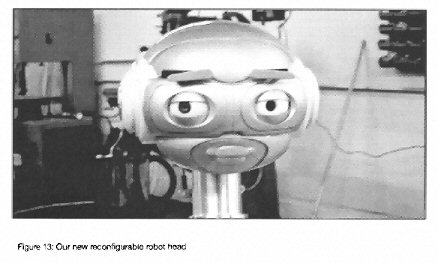

While theories of the “uncanny valley” are debatable (see Hanson’s “Upending the Uncanny Valley” (.pdf)), the quest for human-like androids and automatons continues to compel their designers. At Carnegie Mellon University’s anthropomorphism.org, I found an interesting early study of robot head design that shows how these designers choose when to make robots anthropomorphic (human-like) and when to avoid such resemblance.

In “All Robots Are Not Created Equal,” Carl F. DiSalvo et al. (2002) analyze the human perception of the humanoid robot head in alarming detail, from the length between the top of the head and the brow-line, to the diameter of the eyeball, to the distance between pupils. The researchers want to know: how human should a robot head be, and is this contingent upon the context in which the robot is employed? Their study suggests that eyes, mouth, ears and nose, in that order, seem to be the most important traits for us to perceive the “humanness” in a machine. But the most interesting conclusion they draw, in my view, is that the more servile and industrial the robot, the less we want to perceive its resemblance to us. Thus, not all robots are created equal: “consumer” robots are often purposely more “robotic-looking” (mechanical) in design, since they often perform servitude and routine functions that would crush the spirit of any real human, while other robots, especially “fictional” ones, are often the most human-like of all, reflecting our projected fantasies for them as “characters.” DiSalvo and crew propose that the following elements of robot design would create the ideal “human-like” robot:

1. wide head, wide eyes

2. features that dominate the face

3. complexity and detail in the eyes

4. four or more features

5. skin

6. humanistic form language

To what degree is our notion of the “double” located on the head, the face and its various features? Freud’s classic inventory of uncanny traits includes doll’s eyes and language, and I would suggest that the more of the traits listed above appear in a doppelganger, the more uncanny that double might be. Moreover, the uncanny valley is at work here, and while this theory is not directly addressed in DiSalvo’s article, it’s worth considering the degree to which increasing “likeness” in robot head design follows the x-axis of the classic uncanny valley:

It is useful to consider not only the “uncanny” in this chart, but the way that assumptions about use value and instrumentality lie behind its structure. There is a politics of self/othering at work in this schema that is rarely discussed. One of the fundamental principles of the Uncanny as it is classically understood in aesthetics is that, symbolically, the “double” is a harbinger of death for the subject that perceives it. This is a complicated notion, but on one level it means that when the self perceives itself as disembodied and located in another entity, through its mirror image, we unconsciously recognize how “replaceable” we are, and this is felt as uncanny. We do not typically respond with fear alone: we also feel compelled to separate the Self from the Other as a form of protection against the threat that the Other presents. A power relationship transpires: the psyche construes a hierarchical separation that institutes the Self in a higher subject position than the Other, in order to retain its sense of mastery over identity. The Other is subjugated into a lower position, often one that is loathed or considered repulsive. While such Othering is “harmless” in fiction, this is also a dream that reproduces the politics of everyday life.

There is a generalized fear of robots and other forms of artificial intelligence “replacing” mankind; we see it everywhere in science fiction, but it is also a very real threat to the labor force. Robot design participates in a self/othering dynamic that domesticates these anxieties. Could the uncanny valley be a symptom of class conflict as much as some organic reaction formation? I think so.

On a lighter note, test these theories against the Life magazine photogallery, “Robots We Fear, Robots We Like”.
